Kubernetes Concepts

| Concept | Description |

|---|---|

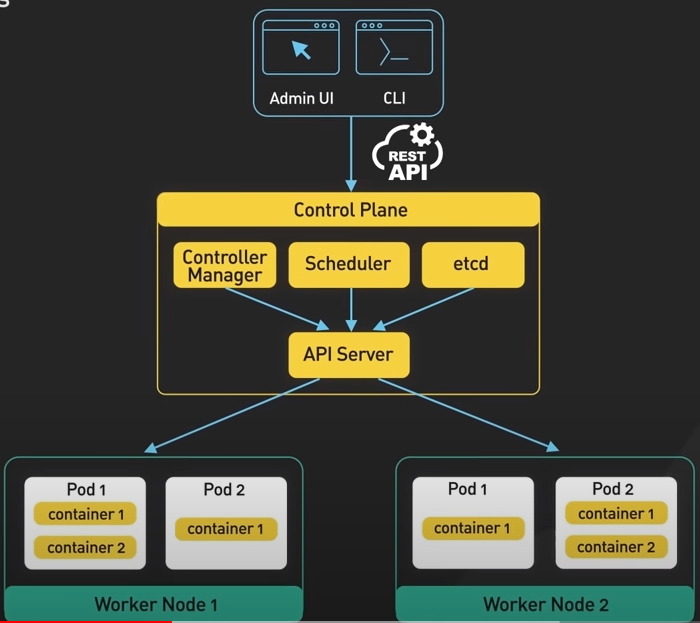

| Control Plane | Responsible for the state of the cluster |

| Worker node | run the containerized application workloads |

| Pod | the smallest deployable units in Kubernetes |

| A pod hosts one or more containers, | |

| and provides shared storage and networking for them |
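To make the "shared storage" part of the Pod definition concrete, here is a minimal sketch of a two-container Pod whose containers mount the same `emptyDir` volume. The file name `shared-demo-pod.yaml`, the Pod name, the container names, and the `busybox:1.36` image are all assumptions chosen for illustration.

```shell
# Write a hypothetical two-container Pod manifest to a local file.
# Both containers mount the same emptyDir volume at /data, so a file
# written by "writer" is visible to "reader".
cat > shared-demo-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: shared-demo
spec:
  volumes:
    - name: shared-data
      emptyDir: {}
  containers:
    - name: writer
      image: busybox:1.36
      command: ["sh", "-c", "echo hello > /data/msg && sleep 3600"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
    - name: reader
      image: busybox:1.36
      command: ["sh", "-c", "sleep 3600"]
      volumeMounts:
        - name: shared-data
          mountPath: /data
EOF

# With access to a cluster, the manifest could then be applied and checked:
#   kubectl apply -f shared-demo-pod.yaml
#   kubectl exec shared-demo -c reader -- cat /data/msg
```

The `kubectl` lines are left as comments so the snippet can be run without a cluster.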

| Control Plane | Description |
|---|---|
| Controller Manager | Runs the controllers that manage the state of the cluster, e.g. ReplicationController, DeploymentController |
| Scheduler | Schedules Pods onto the worker nodes in the cluster |
| etcd | A distributed key-value store that holds the cluster's persistent state |
| API Server | The primary interface between the control plane and the rest of the cluster |

| Worker node | Description |
|---|---|
| kubelet | A daemon that runs on each worker node and communicates with the control plane. It receives instructions from the control plane about which Pods to run on the node and ensures that the desired state of those Pods is maintained |
| kube-proxy | A network proxy that runs on each worker node and routes traffic to the correct Pods. It provides load balancing so that traffic is distributed evenly across the Pods |
| container runtime | Runs the containers on the worker node: pulls images from a registry, starts and stops containers, and manages the containers' resources |

Configuration Type

- All-in-One Single-Node Installation: In this setup, all the control plane and worker components are installed and running on a single node. While it is useful for learning, development, and testing, it is not recommended for production purposes.

- Single-Control Plane and Multi-Worker Installation: In this setup, we have a single control plane node running a stacked etcd instance. Multiple worker nodes can be managed by the control plane node.

- Single-Control Plane with Single-Node etcd, and Multi-Worker Installation: In this setup, we have a single control plane node with an external etcd instance. Multiple worker nodes can be managed by the control plane node.

- Multi-Control Plane and Multi-Worker Installation: In this setup, we have multiple control plane nodes configured for High-Availability (HA), with each control plane node running a stacked etcd instance. The etcd instances are also configured in an HA etcd cluster, and multiple worker nodes can be managed by the HA control plane.

- Multi-Control Plane with Multi-Node etcd, and Multi-Worker Installation: In this setup, we have multiple control plane nodes configured in HA mode, with each control plane node paired with an external etcd instance. The external etcd instances are also configured in an HA etcd cluster, and multiple worker nodes can be managed by the HA control plane. This is the most advanced cluster configuration recommended for production environments.

Container Images

| Container Image | Supported Architectures |
|---|---|
| registry.k8s.io/kube-apiserver:v1.27.1 | amd64, arm, arm64, ppc64le, s390x |
| registry.k8s.io/kube-controller-manager:v1.27.1 | amd64, arm, arm64, ppc64le, s390x |
| registry.k8s.io/kube-proxy:v1.27.1 | amd64, arm, arm64, ppc64le, s390x |
| registry.k8s.io/kube-scheduler:v1.27.1 | amd64, arm, arm64, ppc64le, s390x |
| registry.k8s.io/conformance:v1.27.1 | amd64, arm, arm64, ppc64le, s390x |

Workloads

- Deployment and ReplicaSet (replacing the legacy resource ReplicationController). Deployment is a good fit for managing a stateless application workload on your cluster, where any Pod in the Deployment is interchangeable and can be replaced if needed.

- StatefulSet lets you run one or more related Pods that do track state somehow. For example, if your workload records data persistently, you can run a StatefulSet that matches each Pod with a PersistentVolume. Your code, running in the Pods for that StatefulSet, can replicate data to other Pods in the same StatefulSet to improve overall resilience.

- DaemonSet defines Pods that provide facilities that are local to nodes. Every time you add a node to your cluster that matches the specification in a DaemonSet, the control plane schedules a Pod for that DaemonSet onto the new node. Each Pod in a DaemonSet performs a job similar to a system daemon on a classic Unix / POSIX server. A DaemonSet might be fundamental to the operation of your cluster, such as a plugin to run cluster networking; it might help you to manage the node; or it could provide optional behavior that enhances the container platform you are running.

- Job and CronJob provide different ways to define tasks that run to completion and then stop. You can use a Job to define a task that runs to completion, just once. You can use a CronJob to run the same Job multiple times according to a schedule.
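As an illustration of the stateless case, the following sketch writes a small Deployment manifest with three interchangeable replicas. The file name `web-deployment.yaml`, the name and label `web`, and the `nginx:1.25` image are assumptions made for this example only.

```shell
# Write a hypothetical Deployment manifest. The Deployment creates a
# ReplicaSet, which in turn keeps three identical Pods running.
cat > web-deployment.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web
  template:
    metadata:
      labels:
        app: web
    spec:
      containers:
        - name: web
          image: nginx:1.25
          ports:
            - containerPort: 80
EOF

# With access to a cluster:
#   kubectl apply -f web-deployment.yaml
#   kubectl get deployment,replicaset,pods -l app=web
```

The selector's `matchLabels` must match the labels in the Pod template; otherwise the API server rejects the Deployment.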

Create ConfigMap

kubectl create configmap <map-name> <data-source>

## Create the local directory:

mkdir -p configure-pod-container/configmap/

# Download the sample files into `configure-pod-container/configmap/` directory

wget https://kubernetes.io/examples/configmap/game.properties -O configure-pod-container/configmap/game.properties

wget https://kubernetes.io/examples/configmap/ui.properties -O configure-pod-container/configmap/ui.properties

# use properties

kubectl create configmap game-config --from-file=configure-pod-container/configmap/

kubectl describe configmaps game-config

kubectl get configmaps game-config -o yaml

# use env file

kubectl create configmap game-config-env-file --from-env-file=kube/configmap/game-env-file.properties

## literal values

kubectl create configmap special-config --from-literal=special.how=very --from-literal=special.type=charm

## delete

kubectl delete configmap <map-name>

## Define container environment variables with data from multiple ConfigMaps

kubectl create -f https://kubernetes.io/examples/configmap/configmaps.yaml
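The `--from-literal` form used for `special-config` above can also be written declaratively. The sketch below writes what should be the equivalent ConfigMap manifest; the file name `special-config.yaml` is an assumption.

```shell
# Declarative counterpart of:
#   kubectl create configmap special-config \
#     --from-literal=special.how=very --from-literal=special.type=charm
cat > special-config.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: special-config
data:
  special.how: very
  special.type: charm
EOF

# With access to a cluster:
#   kubectl apply -f special-config.yaml
#   kubectl get configmap special-config -o yaml
```

Keeping the manifest in a file makes the ConfigMap easy to review and version alongside the workloads that consume it.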

Create Secret

# use raw data

kubectl create secret generic db-user-pass \
  --from-literal=username=admin \
  --from-literal=password='S!B\*d$zDsb='

# use source files

kubectl create secret generic db-user-pass \
  --from-file=./username.txt \
  --from-file=./password.txt

kubectl get secret db-user-pass -o jsonpath='{.data}'

echo 'UyFCXCpkJHpEc2I9' | base64 --decode

# create a TLS Secret

kubectl create secret tls my-tls-secret \
  --cert=path/to/cert/file \
  --key=path/to/key/file
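The values under `.data` of the `db-user-pass` Secret shown earlier are base64-encoded, not encrypted. The following sketch reproduces the encoding locally with the sample password from this section, without needing a cluster; the variable names are illustrative.

```shell
# Encode the sample password the way it appears under .data.
# Single quotes keep the shell from interpreting !, \, * and $.
encoded=$(printf '%s' 'S!B\*d$zDsb=' | base64)
printf '%s\n' "$encoded"    # UyFCXCpkJHpEc2I9

# Decode it again to recover the original value.
decoded=$(printf '%s' "$encoded" | base64 --decode)
printf '%s\n' "$decoded"    # S!B\*d$zDsb=
```

Because anyone who can read the Secret can run the same decode step, read access to Secrets should be restricted.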

QoS class

- Guaranteed: every Container in the Pod must have a memory limit and a memory request that are equal, and a CPU limit and a CPU request that are equal.

- Burstable: the Pod does not meet the criteria for Guaranteed, and at least one Container in the Pod has a memory or CPU request or limit.

- BestEffort: the Containers in the Pod must not have any memory or CPU limits or requests.
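The classification rules can be sketched as a small shell function. This is a simplified illustration for a single-container Pod, not how Kubernetes itself is implemented. The function name `qos_class` is hypothetical, quantities are compared as plain strings (so `500m` and `0.5` would not be treated as equal), and it assumes the Kubernetes rule that Guaranteed requires both CPU and memory limits equal to their requests, with an unset request defaulting to the limit.

```shell
# Hypothetical helper: classify a single-container Pod.
# Arguments: cpu_request cpu_limit mem_request mem_limit
# Pass an empty string for any value that is not set.
qos_class() {
  cpu_req=$1 cpu_lim=$2 mem_req=$3 mem_lim=$4
  if [ -z "$cpu_req$cpu_lim$mem_req$mem_lim" ]; then
    # Nothing set at all.
    echo BestEffort
  elif [ -n "$cpu_lim" ] && [ -n "$mem_lim" ] \
    && [ "${cpu_req:-$cpu_lim}" = "$cpu_lim" ] \
    && [ "${mem_req:-$mem_lim}" = "$mem_lim" ]; then
    # Both limits set, and each request equals (or defaults to) its limit.
    echo Guaranteed
  else
    echo Burstable
  fi
}

qos_class 500m 500m 128Mi 128Mi   # Guaranteed
qos_class 250m 500m "" ""         # Burstable
qos_class "" "" "" ""             # BestEffort
```

On a real cluster the assigned class can be read from the Pod's `status.qosClass` field.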

accessModes

- ReadWriteOnce: the volume can be mounted as read-write by a single node.

- ReadWriteOncePod: the volume can be mounted as read-write by a single Pod.

- ReadOnlyMany: the volume can be mounted as read-only by many nodes.

- ReadWriteMany: the volume can be mounted as read-write by many nodes.
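An access mode is requested in the `accessModes` field of a PersistentVolumeClaim. A minimal sketch follows, assuming the file name `demo-claim.yaml`, the claim name `demo-claim`, and a 1Gi request; whether the claim binds depends on the storage available in the cluster.

```shell
# Write a hypothetical PersistentVolumeClaim that asks for a volume
# mountable as read-write by a single node.
cat > demo-claim.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: demo-claim
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
EOF

# With access to a cluster:
#   kubectl apply -f demo-claim.yaml
#   kubectl get pvc demo-claim
```

Swapping `ReadWriteOnce` for another mode only works if the underlying volume plugin supports that mode.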