Docker

[CORE]

# 1. run an empty container

docker run -id --name base node:lts-alpine

# 2. open a shell inside it, explore, and make sure the service runs smoothly

# (Alpine-based images ship sh, not bash)

docker exec -ti base sh

# 3. commit the `base` container as a reusable base image

docker commit base base

# Configuration changes (if any)

# For example, setting up SSH keys, configuring Git, etc.

# RUN ...

# Commit the changes to create a new base image

# Use 'docker commit' after running a container from this image and making changes

# Example:

docker run --name my-temp-container -it my-minimal-image

# ... make changes ...

docker commit my-temp-container my-configured-base-image

general commands

DOCKERFILE

FROM- every Dockerfile starts with a FROM instruction, which names the base image for the image being built.

MAINTAINER- records the author of the Dockerfile. It is deprecated; current Dockerfiles use a LABEL instead.

RUN- runs a shell command on top of the current image layer. RUN instructions execute at build time, and you can use as many of them as you need.

CMD- does not execute at build time; it runs when a container is started from the image. Only one CMD takes effect: if a Dockerfile contains several, the last one is used and the rest are ignored. The CMD can be overridden by passing a command to docker run. If the Dockerfile also has an ENTRYPOINT, the CMD values are passed to the entrypoint as arguments. CMD can be written in two forms: the executable form and the parameters form.

parameters form:

CMD ["param1","param2"]

Here param1 and param2 are passed to the ENTRYPOINT command as arguments. Example:

ENTRYPOINT ["./main.sh"]

CMD ["postgres"]

In the above example, postgres is the first argument to the entrypoint command.

This is like running the shell script with one argument: ./main.sh postgres

executable form:

CMD ["python", "app.py"]

python app.py runs when the container starts.

If you pass a command after the image name to docker run, that command replaces the CMD from the Dockerfile.

EXPOSE- documents the port the container listens on at runtime. It does not publish the port; use docker run -p for that.

ENV- this command is used to set environment variables in the container.

COPY- COPY <SRC> <DEST> copies files and directories from the build context on the host into the image. If the destination does not exist, it is created.

COPY . /usr/src/app

Here the dot (.) means everything in the build context, usually the directory containing the Dockerfile; it is copied into /usr/src/app in the image.

ADD- ADD <SRC> <DEST> copies files and directories from the host into the image, and it also supports fetching files from remote URLs.

ENTRYPOINT- the command that the container executes first. If you specify more than one ENTRYPOINT, only the last one takes effect and the rest are ignored. The CMD parameters are passed to the entrypoint command as arguments.

USER- sets the user name or user ID (UID) used when running the image and for the instructions that follow it.

WORKDIR- works like the Linux cd command. It sets the working directory for the instructions that follow, such as RUN, CMD, and ENTRYPOINT, and creates the directory if it does not exist.

WORKDIR /devops

RUN npm install

Here npm install runs in the /devops directory.

Ubuntu Dockerfile

FROM ubuntu:14.04

RUN \
  sed -i 's/# \(.*multiverse$\)/\1/g' /etc/apt/sources.list && \
  apt-get update && \
  apt-get -y upgrade && \
  apt-get install -y build-essential && \
  apt-get install -y software-properties-common && \
  apt-get install -y byobu curl git htop man unzip vim wget && \
  rm -rf /var/lib/apt/lists/*

# Add files.

ADD root/.bashrc /root/.bashrc

ADD root/.gitconfig /root/.gitconfig

ADD root/.scripts /root/.scripts

# Set environment variables.

ENV HOME /root

# Define working directory.

WORKDIR /root

# Define default command.

CMD ["bash"]

Node.js Dockerfile

FROM dockerfile/python

RUN \
  cd /tmp && \
  wget http://nodejs.org/dist/node-latest.tar.gz && \
  tar xvzf node-latest.tar.gz && \
  rm -f node-latest.tar.gz && \
  cd node-v* && \
  ./configure && \
  CXX="g++ -Wno-unused-local-typedefs" make && \
  CXX="g++ -Wno-unused-local-typedefs" make install && \
  cd /tmp && \
  rm -rf /tmp/node-v* && \
  npm install -g npm && \
  printf '\n# Node.js\nexport PATH="node_modules/.bin:$PATH"' >> /root/.bashrc

# Define working directory.

WORKDIR /data

# Define default command.

CMD ["bash"]

CMD vs ENTRYPOINT

Docker has a default entrypoint which is /bin/sh -c but does not have a default command.

When you run docker like this: docker run -i -t ubuntu bash the entrypoint is the default /bin/sh -c, the image is ubuntu and the command is bash.

The command is run via the entrypoint. i.e., the actual thing that gets executed is /bin/sh -c bash. This allowed Docker to implement RUN quickly by relying on the shell’s parser.

Later on, people asked to be able to customize this, so ENTRYPOINT and --entrypoint were introduced.

Everything after the image name, ubuntu in the example above, is the command and is passed to the entrypoint. When using the CMD instruction, it is exactly as if you were executing

docker run -i -t ubuntu <cmd>

The parameter of the entrypoint is <cmd>.

You will also get the same result if you instead type this command docker run -i -t ubuntu: a bash shell will start in the container because in the ubuntu Dockerfile a default CMD is specified:

CMD ["bash"].

As everything is passed to the entrypoint, you can get very nice behavior from your images. One good example is using an image as a "binary". With ["/bin/cat"] as the entrypoint, running docker run img /etc/passwd passes /etc/passwd as the command to the entrypoint, so the end result is simply /bin/cat /etc/passwd.

Another example is to use any CLI as the entrypoint. For instance, if you have a redis image, instead of running docker run redisimg redis -H something -u toto get key, you can set ENTRYPOINT ["redis", "-H", "something", "-u", "toto"] and then run docker run redisimg get key for the same result.
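
The way ENTRYPOINT, CMD, and the arguments after the image name combine can be modeled without Docker at all. This is a minimal shell sketch, not Docker's actual implementation: `effective_command` is a hypothetical helper, and it treats each part as one space-separated string, which simplifies the exec form.

```shell
#!/bin/sh
# Hypothetical model of how the container's process is assembled.
# $1 = ENTRYPOINT, $2 = default CMD from the Dockerfile,
# $3 = arguments given after the image name on `docker run`.
effective_command() {
    entrypoint=$1
    default_cmd=$2
    run_args=$3

    # Arguments on the command line replace CMD entirely.
    if [ -n "$run_args" ]; then
        args=$run_args
    else
        args=$default_cmd
    fi

    # The entrypoint (if any) is prefixed to whatever arguments remain.
    if [ -n "$entrypoint" ] && [ -n "$args" ]; then
        printf '%s %s\n' "$entrypoint" "$args"
    else
        printf '%s\n' "$entrypoint$args"
    fi
}

effective_command "/bin/cat" "" "/etc/passwd"   # ENTRYPOINT plus run arguments
effective_command "./main.sh" "postgres" ""     # ENTRYPOINT plus default CMD
effective_command "" "bash" "ls -l"             # run arguments override CMD
```

The three calls print `/bin/cat /etc/passwd`, `./main.sh postgres`, and `ls -l`, matching the cases described above.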

CMD VS RUN

RUN is an image build step: the state of the container after a RUN command is committed to the image. A Dockerfile can have many RUN steps that layer on top of one another to build the image.

CMD is the command the container executes by default when you launch the built image. A Dockerfile will only use the final CMD defined. The CMD can be overridden when starting a container with docker run $image $other_command.

ENTRYPOINT is also closely related to CMD and can modify the way a container starts an image.

Docker logs

# find the Docker container log file location

docker inspect --format='{{.LogPath}}' container_id

# find the logs of docker container

docker logs container_id

# docker container logs with timestamp

docker logs container_id --timestamps

# find docker container logs since particular date

docker logs container_id --since YYYY-MM-DD

# find Docker container logs since particular time

docker logs container_id --since YYYY-MM-DDTHH:MM

# Tail the last N lines of logs

docker logs container_id --tail N

# follow the docker container logs (see live logs)

docker logs container_id --follow

# write docker logs to a file

# with the default json-file logging driver, each container's log is stored in

# /var/lib/docker/containers/container_id/container_id-json.log

# save the container's stdout

docker logs container > /tmp/stdout.log

# save the container's stderr

docker logs container 2> /tmp/stderr.log
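
The two redirects above work because docker logs replays the container's stdout and stderr on the matching streams. The sketch below shows the same redirection pattern without needing a running container; `emit_logs` is a made-up stand-in for `docker logs container`, and the file names are only illustrative.

```shell
#!/bin/sh
# Stand-in for `docker logs container`: writes one line to each stream.
emit_logs() {
    echo "app started"
    echo "warning: disk almost full" >&2
}

log_dir=$(mktemp -d)

# Separate files: stdout and stderr never mix.
emit_logs > "$log_dir/stdout.log" 2> "$log_dir/stderr.log"

# One combined file: 2>&1 must come after the stdout redirect.
emit_logs > "$log_dir/combined.log" 2>&1

# Count the lines that ended up in the combined file.
wc -l < "$log_dir/combined.log"
```

Swapping `emit_logs` for a real `docker logs container_id` call should keep the same behavior, assuming the container was not started with a TTY.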

docker login [OPTIONS] [SERVER]

docker login --username foo --password pwd registry.cn-shanghai.aliyuncs.com

## Error saving credentials: error storing credentials - err: exit status 1, out: Cannot autolaunch D-Bus without X11 $DISPLAY

# Ubuntu/debian, create a gpg2 key, then create the password store using the gpg userid

sudo apt-get install pass gpg2

gpg2 --gen-key

pass init $gpg_id

# Fedora/RHEL

sudo yum install pass gnupg2

gpg2 --gen-key

pass init $gpg_id

Build Images

nodejs base image

# Use Alpine Linux as the base image

FROM alpine:latest

# Install necessary packages

RUN apk update

RUN apk --no-cache add \
    git \
    nodejs \
    npm \
    openssh \
    vim \
    curl

# puppeteer support

ENV CHROME_BIN="/usr/bin/chromium-browser" \
    PUPPETEER_SKIP_CHROMIUM_DOWNLOAD="true"

RUN apk add --no-cache chromium --repository=http://dl-cdn.alpinelinux.org/alpine/v3.19/main

# Set up a non-root user (optional but recommended for security)

RUN adduser -D myuser

USER myuser

# Set the working directory

WORKDIR /app

# Entry point (optional)

CMD ["sh"]

puppeteer

FROM node:lts-alpine

RUN apk add --no-cache \
    chromium \
    nss \
    freetype \
    harfbuzz \
    ca-certificates \
    ttf-freefont

ENV PUPPETEER_SKIP_CHROMIUM_DOWNLOAD=true \
    PUPPETEER_EXECUTABLE_PATH=/usr/bin/chromium-browser

# -G (uppercase) puts the user in the pptruser group; lowercase -g only sets the GECOS field

RUN addgroup -S pptruser && adduser -S -G pptruser pptruser \
    && mkdir -p /home/pptruser/Downloads /app \
    && chown -R pptruser:pptruser /home/pptruser \
    && chown -R pptruser:pptruser /app

USER pptruser

COPY ./ /app

WORKDIR /app

RUN yarn install

EXPOSE 3000

CMD ["npm", "run", "start"]

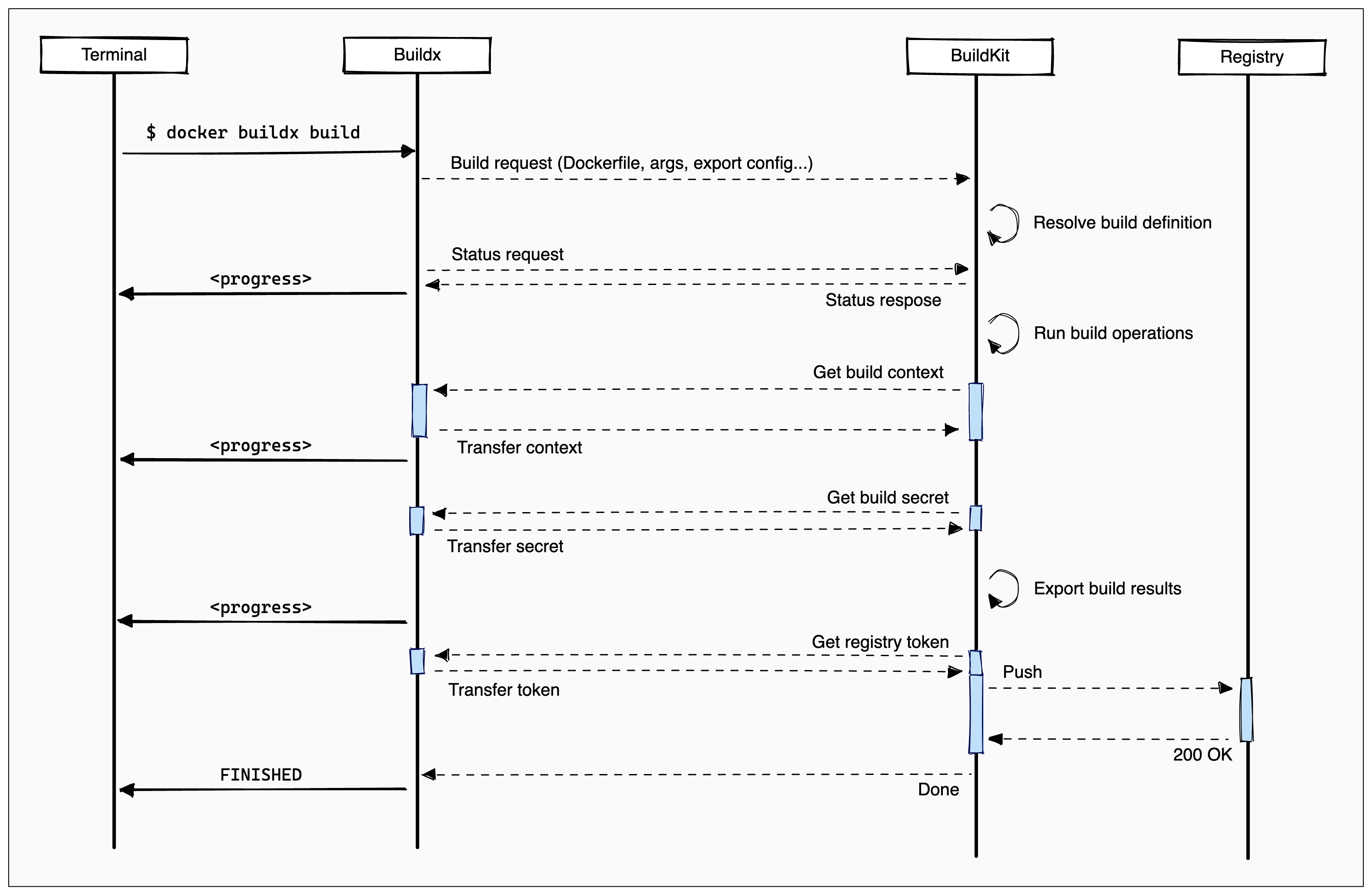

create multi-platform image

docker buildx create --use

docker buildx build --platform=linux/amd64,linux/arm64 --push -t ci_registry/repo_group/repo_name:tag . --no-cache

Aliyun registry

# 1. Log in to the Aliyun Docker registry (use the same region you push to)

docker login --username=yourname registry.cn-shanghai.aliyuncs.com

# 2. tag the local image & push it to the registry

docker tag [ImageId] registry.cn-shanghai.aliyuncs.com/namespace/groupname:[image-version]

docker push registry.cn-shanghai.aliyuncs.com/namespace/groupname:[image-version]

# internal CI push

docker tag 37bb9c63c8b2 registry-vpc.cn-shanghai.aliyuncs.com/acs/agent:0.7-dfb6816
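
The tag and push commands above take the same reference, so building it once avoids typos between the two. This is a small sketch under assumptions: `image_ref` is a hypothetical helper, the tag `1.0.0` is a made-up value, and the script only prints the commands rather than running them.

```shell
#!/bin/sh
# Hypothetical helper: join registry, namespace, repository and tag
# into the reference that `docker tag` and `docker push` expect.
image_ref() {
    registry=$1
    namespace=$2
    repo=$3
    tag=${4:-latest}   # Docker also assumes "latest" when no tag is given
    printf '%s/%s/%s:%s\n' "$registry" "$namespace" "$repo" "$tag"
}

ref=$(image_ref registry.cn-shanghai.aliyuncs.com namespace groupname 1.0.0)

# Print the commands instead of executing them.
echo "docker tag [ImageId] $ref"
echo "docker push $ref"
```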

ssl configuration

openssl version

# OpenSSL 1.1.1n

sudo apt-get install letsencrypt

sudo certbot certificates

# quote the wildcard so the shell does not expand it

sudo certbot certonly --manual --preferred-challenges=dns -d '*.example.com'

## /etc/letsencrypt/live/example.com/fullchain.pem

## /etc/letsencrypt/live/example.com/privkey.pem

docker run --entrypoint htpasswd httpd:2 -Bbn username userpass > auth/htpasswd

registry:
  image: "registry:2.7"
  container_name: registry
  restart: unless-stopped
  environment:
    - REGISTRY_HTTP_ADDR=0.0.0.0:443
    - REGISTRY_STORAGE_DELETE_ENABLED=true
    - REGISTRY_AUTH=htpasswd
    - REGISTRY_AUTH_HTPASSWD_REALM="Registry Realm"
    - REGISTRY_HTTP_TLS_CERTIFICATE=/certs/domain.pem
    - REGISTRY_HTTP_TLS_KEY=/certs/domain.pem
    - REGISTRY_AUTH_HTPASSWD_PATH=/auth/htpasswd
  volumes:
    - $PWD/ssl:/certs
    - $PWD/htpasswd:/auth/htpasswd
    - /data/registry:/var/lib/registry
  networks:
    net:
      ipv4_address: 172.16.8.3

registry-ui:
  image: "jc21/registry-ui"
  container_name: registry-ui
  restart: unless-stopped
  environment:
    - REGISTRY_HOST=registry:443
    - REGISTRY_SSL=true
    - REGISTRY_DOMAIN=registry.domain.com
    - REGISTRY_STORAGE_DELETE_ENABLED=true
  networks:
    net:
      ipv4_address: 172.16.8.4

{
  "allow-nondistributable-artifacts": ["registry.example.com"]
}

docker install on ubuntu 22.04 LTS

for pkg in docker.io docker-doc docker-compose docker-compose-v2 podman-docker containerd runc; do sudo apt-get remove $pkg; done

# Add Docker's official GPG key:

sudo apt-get update

sudo apt-get install ca-certificates curl gnupg

sudo install -m 0755 -d /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

sudo chmod a+r /etc/apt/keyrings/docker.gpg

# Add the repository to Apt sources:

echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu \
  $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt-get update

# install latest version

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

# install specific version

# List the available versions:

apt-cache madison docker-ce | awk '{ print $3 }'

VERSION_STRING=5:24.0.0-1~ubuntu.22.04~jammy

sudo apt-get install docker-ce=$VERSION_STRING docker-ce-cli=$VERSION_STRING containerd.io docker-buildx-plugin docker-compose-plugin

# verify installation

sudo docker run hello-world

manual install

- https://download.docker.com/linux/ubuntu/dists/jammy/pool/stable/amd64/

sudo dpkg -i ./containerd.io_<version>_<arch>.deb \
  ./docker-ce_<version>_<arch>.deb \
  ./docker-ce-cli_<version>_<arch>.deb \
  ./docker-buildx-plugin_<version>_<arch>.deb \
  ./docker-compose-plugin_<version>_<arch>.deb

sudo service docker start

sudo docker run hello-world

Manage Docker as a non-root user

# Create the docker group.

sudo groupadd docker

# Add your user to the docker group.

sudo usermod -aG docker $USER

# Log out and log back in so that your group membership is re-evaluated.

# Verify that you can run docker commands without sudo.

docker ps

docker-compose install

sudo curl -L "https://github.com/docker/compose/releases/download/v2.23.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

sudo mv /usr/local/bin/docker-compose /usr/bin/docker-compose

sudo chmod +x /usr/bin/docker-compose
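
Before downloading, it can help to see which file name the `$(uname -s)-$(uname -m)` part of the curl command expands to on the current machine. The sketch below only prints the URL; `compose_url` is a hypothetical helper, and it does not check that the release page actually offers an asset with that exact name (asset names may differ in letter case).

```shell
#!/bin/sh
# Hypothetical helper: print the release asset URL that the curl
# command above would request for a given Compose version.
compose_url() {
    version=$1
    printf 'https://github.com/docker/compose/releases/download/%s/docker-compose-%s-%s\n' \
        "$version" "$(uname -s)" "$(uname -m)"
}

compose_url v2.23.2
```

If the printed name does not appear on the release page, adjust the OS or architecture part by hand before running curl.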

docker credentials

# docker secret requires swarm mode (docker swarm init)

echo "aws01us02" | docker secret create aws_access_key_id -

echo "topsecret9b78c6" | docker secret create aws_secret_access_key -

echo "us-east-1" | docker secret create aws_region -

docker secret ls

ID                          NAME                    DRIVER    CREATED              UPDATED
i4g62kyuy80lnti5d05oqzgwh   aws_access_key_id                 5 minutes ago        5 minutes ago
uegit5plcwodp57fxbqbnke7h   aws_secret_access_key             3 minutes ago        3 minutes ago
fxbqbnke7hplcwodp57fuegit   aws_region                        About a minute ago   About a minute ago

# Add the secrets to the command line when you run Docker.

docker run -d -p 3000:3000 --name grafana \
  -e "GF_DEFAULT_INSTANCE_NAME=my-grafana" \
  -e "GF_AWS_PROFILES=default" \
  -e "GF_AWS_default_ACCESS_KEY_ID__FILE=/run/secrets/aws_access_key_id" \
  -e "GF_AWS_default_SECRET_ACCESS_KEY__FILE=/run/secrets/aws_secret_access_key" \
  -e "GF_AWS_default_REGION__FILE=/run/secrets/aws_region" \
  -v grafana-data:/var/lib/grafana \
  grafana/grafana-enterprise

downgrade the container version on linux

sudo apt install containerd=1.5.2-0ubuntu1~18.04.3

sudo apt install ./docker.io_19.03.6-0ubuntu1_18.04.3_arm64.deb

# pin the packages so apt does not upgrade them

sudo apt-mark hold docker.io containerd

# release the pin when upgrades are allowed again

sudo apt-mark unhold docker.io containerd

dial unix /var/run/docker.sock: connect: permission denied

## reboot not necessary

sudo setfacl --modify user:<username>:rw /var/run/docker.sock

## reboot required

sudo usermod -aG docker $USER

sudo reboot
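
Whether the group change has taken effect in the current session can be checked before retrying docker. This is a minimal sketch: `in_group` is a hypothetical helper built on `id -nG`, which lists the groups of the current process.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the current session's groups include $1.
in_group() {
    id -nG | tr ' ' '\n' | grep -qxF "$1"
}

if in_group docker; then
    echo "docker group is active in this session"
else
    echo "docker group is not active; log out and back in, or run: newgrp docker"
fi
```

A user added with usermod only sees the new group in sessions started afterwards, which is why a fresh login (or reboot) is needed.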

Alpine image DNS not resolved

# You can solve the problem by installing the dhclient package. First, temporarily enable Google's DNS servers by running:

sudo sh -c "echo 'nameserver 8.8.8.8' > /etc/resolv.conf"

# Then update the package index, upgrade, and install `dhclient`:

sudo apk update && sudo apk upgrade && sudo apk add dhclient

# Then edit /etc/dhcp/dhclient.conf and put the following in it:

```conf
option rfc3442-classless-static-routes code 121 = array of unsigned integer 8;

send host-name = gethostname();

request subnet-mask, broadcast-address, time-offset, routers,
    domain-name, domain-name-servers, domain-search, host-name,
    dhcp6.name-servers, dhcp6.domain-search, dhcp6.fqdn, dhcp6.sntp-servers,
    netbios-name-servers, netbios-scope, interface-mtu,
    rfc3442-classless-static-routes, ntp-servers;

prepend domain-name-servers 8.8.8.8, 8.8.4.4;
```

# Then restart networking:

sudo rc-service networking restart

# Optionally, reboot to confirm that the setting persists:

sudo reboot

# In either case, you can confirm that DNS resolves by pinging Google:

ping google.com

Uninstall

# Uninstall the Docker Engine, CLI, containerd, and Docker Compose packages:

sudo apt-get purge docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin docker-ce-rootless-extras

# To delete all images, containers, and volumes:

sudo rm -rf /var/lib/docker

sudo rm -rf /var/lib/containerd

# Remove source list and keyrings

sudo rm /etc/apt/sources.list.d/docker.list

sudo rm /etc/apt/keyrings/docker.gpg